Mixed reality and spatial computing

with Ward Peeters

Download this episode in mp3 (29.27 MB).

This work is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License.

"The next computing era is spatial computing." This is the prediction that Ward Peeters makes for the near future, and he knows what he's talking about. A fan of Star Wars and video games since a young age, Ward was mezmerised by Microsoft's public presentation of the HoloLens and has since devoted his entire career to the development of mixed reality applications.

Ward is co-founder and managing partner at Roger Roll, a dynamic competence center that focuses on advising, guiding and developing experiences in spatial computing. Roger Roll is based in the Antwerp area, in Belgium.

During this episode you will learn why spatial computing is so exciting, and the difference between virtual reality, augmented reality, and mixed reality. You will hear it in the voice of the first person in Belgium to get his hands on a HoloLens device, back when it was only available in the United States. Ward works at the forefront of this technology and shares his knowledge on where we are today, and where we may be headed according to his predictions.

Want to learn more about mixed reality? http://www.rogerroll.com/cases/mixedreality.html

More on spatial computing: http://www.rogerroll.com/cases/spatialcomputing.html

Highlights from this episode

At minute 20:28: "There is a lot of opportunity and space for researching behind the hardware and software and... you know, what if we try something else... what if we try to scan a person in 3D and then send it over the Wi-Fi to the other side of the world, and then view it through mixed reality again... and see that person in 3D... that's telepresence."

At minute 21:46: "I'm convinced that within 10 years this will be the next computer. The next computing era is spatial computing.

"We've always seen the digital world as a separate space, because you need a laptop, you need a computer, or you need a smartphone, a tablet. You need a device that you have to take with you that you use to connect to the digital world. So it's like another dimension basically. And you use this technology to go to that place.

"But I believe that shortly we will see those two places being connected, the physical and the digital. And this is what spatial computing is really about. So that means that, for example, in the case of smart glasses or even smart contact lenses, you would have access to a lot of data without really thinking, okay, I need to take my phone or I need to connect to some server, because everything will be connected to the real world layer."

At minute 24:02: "It's about thinking about digital content in a way that is not connected to a device."

At minute 29:37: "The Cloud is the future. Every device that you have or we have doesn't really have the capabilities to know if an object is a television or maybe just a computer screen, right? Because they almost look the same. But the Cloud has that capability, because the Cloud has done that a million times."

People, places, and devices mentioned in the interview

- "Live scope" VR Arcade in Brussels, Belgium (mentioned at minute 14:30): https://www.livescope.be/en/

- Unity, the real-time 3D development platform used to build mixed reality applications, is mentioned at minute 33:50: https://unity3d.com/partners/microsoft/mixed-reality

- Technologist Peter Hinssen is quoted at minute 45:38 about his prediction on self-driving cars: https://www.peterhinssen.com

- HoloLens: https://www.microsoft.com/en-us/hololens

- Ultrahaptics start-up: https://www.ultrahaptics.com

List of questions [my interventions] in this episode

[Federica:] Welcome to a new episode of Technoculture. I'm your host, Federica Bressan, and today my guest is Ward Peeters, co-founder and managing partner at Roger Roll in the Antwerp area, in Belgium. Roger Roll is a dynamic competence center that focuses on advising, guiding, and developing experiences in spatial computing. Welcome Ward. Today's topic is mixed reality. We also talk about virtual reality a little bit, but I understand that both these are subsets of spatial computing. So maybe a good place to start is to ask you what is spatial computing?

[Federica:] So we're talking about artificial intelligence?

[Federica:] Some say that virtual reality had a spike, was very popular in the '90s, and then it disappeared, and now it's sort of coming back. But these people are not excited about it. They don't believe that it will last. Do you believe that this is actually the beginning of something? So this is the future now?

[Federica:] What kind of realities are we talking about? How many do we have and how do you define reality in this context?

[Federica:] So do I understand correctly that in an augmented reality application, the system could overlay a digital object on any background, because it doesn't know the environment? Whereas in mixed reality, the same object is overlaid on a space that is known, so the application knows the environment, and this has consequences.

[Federica:] And besides gaming, what other applications are there for this technology?

[Federica:] No Pokemon is involved!

[Federica:] When I watch the videos that you share online or other people share online, I'm always amazed by what already exists, by what I see demonstrated in these videos. So can you tell us, where are we exactly? I mean, what are the current challenges in this field? Is it the tracking of my hand or is it the resolution of the objects? Where are we and where are we going next?

[Federica:] What do you mean that mixed reality started when Microsoft came out with that device? Haven't we seen the concept before, in sci-fi movies, when holograms are floating around the room? And they do not clash with objects, so they know the room? And Tony Stark technology, floating around the room also? So how has mixed reality started with Microsoft?

[Federica:] Ok, so the science fiction became possible. How did you start in this field? Were you passionate about sci-fi, were you dreaming about these types of things too?

[Federica:] All right. These things are so new that sometimes you really need to see them to understand what we're talking about. Are there good demos, videos out there, material that I could also link to this podcast episode for our listeners?

[Federica:] If someone like me or a regular person wants to try this technology, can we go someplace? Are there shops, centers, something like that where we can try it, or is it not so accessible? I'm a bit of a VR freak. I have tried it anywhere I've seen it, you know, open to the public, and oftentimes I see this disclaimer - or a warning, actually - that if you have certain conditions, you shouldn't use this application, and that you might feel dizzy. And I've heard that that's normally related to the resolution of the images. Do you think that's the case? Is resolution really a critical factor, and is that what can make you dizzy? Except virtual reality applications of roller coasters: then you'd be sick even in the real world, I guess!

[Federica:] So, say, I could try an augmented reality application on my phone, and virtual reality, it's possible. What about mixed reality? Is this the least accessible?

[Federica:] What about sound? Sound has a great capability of bringing a scene to life. But sound needs to behave in a way that's believable for the environment. So it needs to match the acoustics of the virtual environment. How is that? Where are we on that front? Is it not such a big problem compared to the computational power required by the visuals? Where are we with sound?

[Federica:] Is there a plan to add other senses, or is it being done already? Like the sense of smell, or touch?

[Federica:] What about research in this field? Who is leading research? Is it academia or is it the industry? Because it seems to me that we mostly observe the next step, advancement in this field, with the latest commercial product. You mentioned Microsoft earlier on, so nobody knew about research in that field until they went public with a commercial product. So where is the most experimentation happening? Who leads this?

[Federica:] When I tried some virtual reality experience, it really triggered my imagination. It was already incredible what I could see, what I could do in that virtual world, but my imagination could go beyond that, and imagine things that may not be possible with today's technology. We'll get there. But however impressive it is, today's technology is limited, of course. Is there anything that you would like to see happen, something that you would like to do that is not currently possible?

[Federica:] Ok. So the hologram is actually going to be out there. It's not that I have a device traveling with me in my car that warns me about the hologram or something I wear that lets me see the hologram. The hologram is out there.

[Federica:] A-ha. How would it work from the user's perspective? How much gear do we need? Or is it in the environment? Do we need to equip the environment? Do we need to rewire the entire world? So, I don't even know how a hologram works. Actually, can we have this?

[Federica:] Or you bio-hack your eyes!

[Federica:] So, to make this podcast episode interesting ten years from now, let's take stock of the situation. For example, with mixed reality, what can we do today? Can you put a plant on a table?

[Federica:] Okay. And can you do the cat trick?

[Federica:] All right. And can you make some object that I can interact with, like a piano?

[Federica:] Yeah.

[Federica:] Ok, so it sounds to me like the main problem is just accuracy, so there's not much you can't do. You can make a cat, but maybe sometimes it will just go through a wall.

[Federica:] How big a deal is changing the environment? I mean, I scan a room and then I know it. But what if I move a chair? Then that's a different environment. Do I have to re-scan the entire room? Is the system intelligent enough to recognize certain objects or even people during the scan? Or do you need to vacate the room when you're scanning it?

[Federica:] I've already volunteered some of my fingerprints to Apple because I need to unlock my smartphone and computer. But will the data that one day is shared about me include how my house looks from the inside?

[Federica:] Well, this raises interesting questions. This is probably a completely different podcast episode, but with the potential of this type of technology for the future, we also get ethical questions, indeed, about the use that we could make of it. "With great power comes great responsibility," right? So, speaking of getting to know and to learn this technology, you said that you were a gamer, that you got interested at a very young age and that you just started doing it. But how difficult is it? If a kid at home right now, like you a couple of years ago, is interested in this and wants to try to play with virtual reality or mixed reality, do you need a degree in engineering just to start doing this? Or are there tools and ways that make the learning curve not so daunting?

[Federica:] So this will not be the reason that finally gets young kids interested in math class at school? You can actually start playing with mixed reality without being an engineer? So you don't need to know the math behind these things? There are levels of abstraction built into these programming tools and programming environments that make it unnecessary to actually know the math?

[Federica:] I could make my own pretty cat that walks right through a wall.

[Federica:] Maybe! Ok. So, we said that you can make a plant. We can have a ball that bounces on the floor, and we can have a cat. Now, a cat, even in the real world, is something different than a plant or a ball because it has agency. It's a living thing. So it decides to move around. And as long as it interacts with me, that's fine. But what if it interacted with other virtual cats? Say, a social interaction among synthetic entities. Is that possible? I would say, is there enough intelligence built into these cats for them to interact among themselves?

[Federica:] No.

[Federica:] Since you've been involved with these things, has the scene already changed significantly? I mean, you started as a young kid, but you're still young... so I guess this has not been more than 10 years. So during these years, has the scene already changed a lot?

[Federica:] So you're the co-founder of the competence center Roger Roll. How did this come to be? What led to this company?

[Federica:] From what you've been saying, I gather that if you're good, there are plenty of opportunities in this field right now. And speaking of training and getting good, you have spent a summer or semester at MIT, with a training program. What did you learn there?

[Federica:] Satellites like... large regions? Or the swimming pool in the backyard?

[Federica:] You know, what kind of data were you extracting from satellite images? There are different [types]. What was the focus of the course?

[Federica:] Is there something that you think we will see in our lifetime (you're a bit younger than I am, so something you will surely see in your lifetime) that we don't have now? Not just the next step, something we can't do with mixed reality today and will be able to do in 10 years, but really a vision for the future. If the world looked like a different place 50 years from now, why would that be? What technology would we have? I know it's a huge question, but do you have a vision about that?

[Federica:] Spice up your life a little bit.

[Federica:] Ok. As a developer, as someone who is heavily involved with this, professionally and by passion, do you already spend a lot of time in these other worlds? You spend your time coding, you spend your time testing, so at the end of the day, your everyday life is already futuristic compared to that of any of us. Would you say so?

[Federica:] Yeah, bummer. This is the real world. It's boring!

[Federica:] Well, now it's time for the big question. Can I try this? Can you let me try this HoloLens right here?

[Federica:] Ok. First, let me thank you for being on Technoculture.

[Federica:] And now let's have some fun!

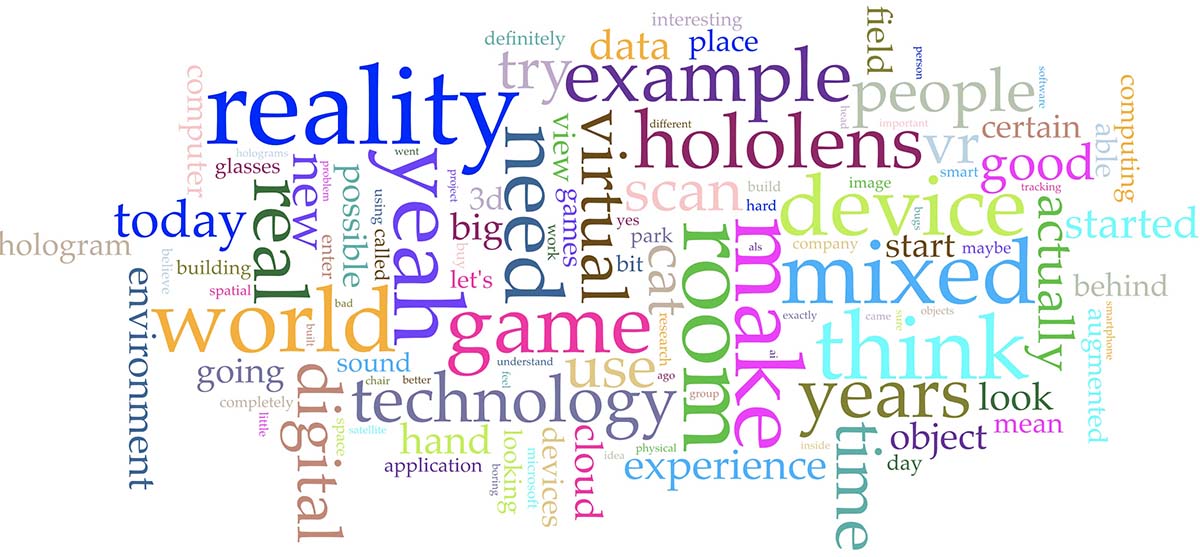

Go to interactive wordcloud (you can choose the number of words and see how many times they occur).

Episode transcript

Download full transcript in PDF (119.67 kB).

Host: Federica Bressan [Federica]

Guest: Ward Peeters [Ward]

[Federica]: Welcome to a new episode of Technoculture. I'm your host, Federica Bressan, and today my guest is Ward Peeters, co-founder and managing partner at Roger Roll in the Antwerp area in Belgium. Roger Roll is a dynamic competence center that focuses on advising, guiding and developing experiences in spatial computing. Welcome, Ward.

[Ward]: Hello, thank you.

[Federica]: Today's topic is mixed reality. We also talk about virtual reality a little bit, but I understand that both these are subsets of spatial computing. So maybe a good place to start is to ask you, what is spatial computing?

[Ward]: Yeah, so spatial computing is when a computer, software and hardware, will map and understand the real world. It uses camera technology or sensor technology to map the real world, to map physical surfaces, and then it uses software to understand it. For example, it can try to figure out what type of room it is in or how big the room is.
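To make the mapping step a little more concrete, here is a minimal sketch in Python of how software might estimate "how big the room is" from scanned surface points. It assumes the device's sensors have already produced a list of (x, y, z) points in metres, with y pointing up and the walls roughly aligned with the x and z axes; the function name and the sample scan are hypothetical, and real spatial mapping systems work with meshes and do far more than this.

```python
from typing import Iterable, Tuple

Point = Tuple[float, float, float]  # (x, y, z) in metres, y pointing up (assumed convention)

def room_dimensions(points: Iterable[Point]) -> Tuple[float, float, float]:
    """Return (width, height, depth) of the axis-aligned box enclosing the scanned points."""
    pts = list(points)
    if not pts:
        raise ValueError("no points in scan")
    xs, ys, zs = zip(*pts)
    return (max(xs) - min(xs), max(ys) - min(ys), max(zs) - min(zs))

# Hypothetical scan: a few points on the floor, walls and ceiling of a room
scan = [(0.0, 0.0, 0.0), (4.0, 0.0, 0.0), (0.0, 2.5, 3.0), (4.0, 2.5, 3.0), (2.0, 1.2, 1.5)]
w, h, d = room_dimensions(scan)
print(f"{w:.1f} m x {h:.1f} m x {d:.1f} m, floor area {w * d:.1f} m^2")
```

The bounding box is the crudest possible answer; it is only meant to show that once surfaces are mapped as coordinates, questions like room size become simple arithmetic.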

[Federica]: So we are talking about artificial intelligence?

[Ward]: Yes and no, depending on which device is being used. If you have a device that has, for example, an infrared camera, it might not necessarily need artificial intelligence to figure out how big the room is. But if you're using the normal camera on your smartphone, and that camera records the room, films the room, you will need AI or computer vision software to figure out, for example, what items are in the room.

[Federica]: Some say that virtual reality had like a spike, was very popular in the '90s, and then it disappeared, and now it's sort of coming back, but these people are not excited about it. They don't believe that it will last. Do you believe that this is actually the start of something, so this is the future now?

[Ward]: Yeah, especially in the case of virtual reality, you're right that there were indeed some virtual reality devices already in the '90s, but the problem there was that these devices were too big, too bulky to put on your head and really enjoy a virtual experience. And today, now that technology has advanced so much, the devices can be much smaller: chips are much smaller and their performance is much higher. That means that the device is smaller, but the virtual world, everything that you're seeing, is crisper and looks better. And I think that's why these technologies will find a lot of really interesting use cases today: the hardware has improved a lot over the years.

[Federica]: What kind of realities are we talking about? How many do we have, and how do you define 'reality' in this context?

[Ward]: Yeah, so in spatial computing, we like to use three different terms to define it. You have virtual reality, where you're completely immersed in another, virtual space. Then you have augmented reality, where you take the real world and overlay it with a digital object. And then you enter mixed reality (which is what we are most focused on): you have an augmented reality object (so you overlay the real world), but then you use sensor technology to place that object somewhere where it makes sense in the real world. I will give you an example of a game, let's say Pokemon. A virtual reality Pokemon game would be: you put on the headset and you enter the world of Pokemons, completely 3D, immersed around you, everything is virtual. In augmented reality, as you might have heard, there's Pokemon Go, this popular game where you go out into a park and you can hunt Pokemons on your smartphone. You will see the Pokemon in the park, but it won't interact with the park. And that's where we enter mixed reality, where you can hunt down Pokemons in the park, but they will, you know, be able to hide behind a tree, or if there's a fountain, they will swim in the water of the fountain. So the augmented objects, the digital objects, are immersed in the physical space, in the real world, and that's what we call mixed reality, and it's what we are very focused on.

[Federica]: So do I understand correctly that in an augmented reality application, the system could overlay a digital object on any background (it doesn't know the environment), whereas in mixed reality, the same object is overlaid on a space that is known, so the application knows the environment, and this has consequences?

[Ward]: Exactly. That's actually a really nice way to put it, yeah. That's exactly what it is.
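One visible consequence of "knowing the environment" is occlusion: the Pokemon that hides behind a tree. A minimal sketch in Python, assuming the device supplies, for each pixel, the distance to the nearest real surface (for example from its spatial map): a mixed reality renderer draws the hologram only where it is closer than the real surface, while a plain augmented reality overlay would skip that test and always draw it. The function names and depth values are hypothetical, and a real engine performs this depth test on the GPU.

```python
from typing import List, Optional

def composite_pixel(hologram_depth: Optional[float], real_depth: float) -> str:
    """Decide what the viewer sees at one pixel.

    hologram_depth: distance in metres from the eye to the hologram at this pixel,
                    or None if the hologram does not cover the pixel.
    real_depth:     distance in metres to the nearest real surface (from the spatial map).
    """
    if hologram_depth is None:
        return "real"
    # Mixed reality: draw the hologram only if it is in front of the real surface.
    # A plain AR overlay would ignore real_depth and always return "hologram".
    return "hologram" if hologram_depth < real_depth else "real"

def composite_row(hologram: List[Optional[float]], real: List[float]) -> List[str]:
    """Apply the per-pixel test across one row of the image."""
    return [composite_pixel(h, r) for h, r in zip(hologram, real)]

# Hypothetical row: a Pokemon 5 m away, partly behind a tree trunk 3 m away
print(composite_row([None, 5.0, 5.0, 5.0, None], [20.0, 20.0, 3.0, 20.0, 20.0]))
```

The middle pixel, where the tree is nearer than the Pokemon, shows the real tree, which is exactly the "hiding" behaviour described in the answer above.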

[Federica]: And besides gaming, what other applications are there for this technology?

[Ward]: Well, right now we see a lot of use cases for training purposes. For all three of these categories of realities, we see that training is very useful. I could give you, again, an example of each reality. For virtual reality, you can place someone in a space that is very dangerous, that has a lot of hazards, and you can train that person in that virtual space. For example, a fireman who has to enter a building: you can have him enter a building, see from the inside how it's burning, have that experience and see, 'Okay, how do I react to this simulation?', whereas putting him in a real building might raise some safety issues. Augmented reality is useful when you want to overlay information on certain things. For example, you're looking at a machine in a factory and it can give you all the data that is streaming from the machine, what it's building, how much power it's consuming, overlaying this extra layer of digital data. And then enter mixed reality, where there are so many possibilities that it gets really confusing to tell what is useful and what is not. I can give you an example of a project that we're working on right now. It's called the SARA project. It's an imec.icon project, and it's about helping neurologists perform brain surgery using smart glasses, and that's about all I can tell you about it, I think.

[Federica]: No Pokemons involved.

[Ward]: No, no Pokemons there.

[Federica]: When I watch the videos that you share online or other people share online, I'm always amazed by what already exists, by what I see demonstrated in these videos. So can you tell us, where are we, exactly? I mean, what are the current challenges in this field? Is it the tracking of my hand, or is it the resolution of the objects? Where are we and where are we going next?

[Ward]: So indeed, the technology is very new, especially mixed reality; augmented and virtual reality are already a little bit older technologies. Mixed reality is very new because it was introduced in 2016, when Microsoft suddenly showed up on stage with their amazing HoloLens device, which is lying on the table here next to us, and that opened up this idea of mixed reality. So yeah, it's very new technology, and it's really hard to talk about it because it's so new. On one hand, people expect a lot of things from it, like it's going to, you know, change the world forever, but on the other hand, there are a lot of hardware limitations that still need to be solved, really, really hard questions that, you know, engineers need to solve in the actual device. And for me, I think one of the most important points here is the field of view of these devices. The field of view measures how much of the holograms, the augmented digital objects, you can see through the glasses, compared to how much you can see with your own view. In these devices, you have a certain area in your field of view where the holograms can be, but it doesn't take up your whole view. Right now for the HoloLens, it's 35 degrees, so within those 35 degrees there's a rectangle in which you see the holograms, and in the new HoloLens, it's up to almost 60 degrees, so they almost doubled that with the newer device. But I hope to see... A full field of view device is 108 degrees, so the holograms can be everywhere around you and you can see them all over your field of view. It's hard to explain.

[Federica]: What do you mean that mixed reality started when Microsoft came out with that device? Haven't we seen the concept before in sci-fi movies when holograms are floating around the room and they do not clash with objects, so they know the room, and Tony Stark technology, floating around the room, also? So how has mixed reality started with Microsoft?

[Ward]: Yeah, I think conceptually, in art and in the world of conceptualizing things, I'm convinced that mixed reality has been around for a very long time. Even in the Star Wars movies, you can see these holograms talking to you. But when I say it started when Microsoft brought out these devices, I mean that the visual effects you're seeing in those movies, which were only visual effects, have now become real, really possible: there are glasses that can actually show you those holograms in your real room.

[Federica]: So the science fiction became possible.

[Ward]: Yeah.

[Federica]: How did you start in this field? Were you passionate about sci-fi? Were you dreaming about these types of things too?

[Ward]: Oh, yeah. [chuckles] Yeah. So I basically grew up watching Star Wars. I love Star Wars. I love sci-fi. I'm a gamer, so I game a lot. Maybe I used to game a lot more than I do now, because I don't have time for it, which is sad. But I got very interested in gaming, and I started to make my own games, so I started to learn what's behind a game, because there's so much more than what you're actually looking at in a game. There's so much behind it: mechanics, code, 3D models, renderings; there are a lot of things going on, and I was really fascinated by that. I always watch the livestreams from Microsoft's conferences, and when I was watching one of them, suddenly there was this HoloLens device, and they showed one of my favorite games, Minecraft, in the HoloLens, and that was enough to convince me that I needed to work with this technology.

[Federica]: All right. These things are so new that sometimes you really need to see them to understand what we're talking about. Are there good demos, videos out there, material that I could also link to this podcast episode for our listeners?

[Ward]: Yes. Well, the issue is that it's a new medium, a new way of visualizing digital content, and the best way to see it is to use the device. You can look at it on a computer, on a 2D screen. That will give you an idea of the experience that people are having, but when you have a device and you try it, you will have a totally different experience when you see it for yourself.

[Federica]: If someone like me or a regular person wants to try this technology, can we go someplace? Are there shops, centers, something like that, where we can try it, or is it not so accessible?

[Ward]: It depends. Among the three categories of realities, if we start again with virtual reality, virtual reality is quite common today. You can buy it easily in MediaMarkt or in any supermarket. You can buy a VR headset, go home, and try it. And there are also some VR arcades in Brussels. I know one, I think it's called Livescope, for example, where you pay a certain price for an hour and then you can play VR games and experience it with a group of people, for example with your company. So VR is definitely very accessible. Now, there's a bit of a problem with the content of VR, which is very important: what are you playing? Some games will give you a really bad experience, but I guess that's the case with every medium. For example, in the beginning of VR, there was this big idea that rollercoasters would be amazing for VR: you put on the headset, you enter a digital rollercoaster, and you start riding. And I think a lot of people who tried VR for the first time with a rollercoaster will say, 'I will never try VR again because it made me sick, and it doesn't look good, and it's not working.' But today there are really a lot of applications that you can try out; some of them are really good, some of them are really bad. It's hard to find the right application to try out.

[Federica]: I'm a bit of a VR freak. I have tried it anywhere I've seen it, you know, open to the public, and oftentimes I see this disclaimer, or warning, actually, that if you have certain conditions, you shouldn't use this application and that you might feel dizzy, and I've heard that that's normally related to the resolution of the images. Do you think that's the case? Is resolution really a critical factor, and is that what can make you dizzy? Except virtual reality applications of rollercoasters: then you'd be sick even in the real world, I guess!

[Ward]: Yeah, so as a developer, you need to hit a certain number of frames per second in your application. What every VR app should aim for is 60 frames per second, which means that your screen is refreshing 60 times a second, and then when you start shaking your head around, the image will move fast enough for your brain to think, 'Okay, I'm moving my head, and I see the image moving.' That's good, but when you move your head around and things are moving slower than you're moving your head, you will definitely get nauseous. And that was a big problem with a lot of the older VR headsets, because they were just not able to render at 60 frames per second, and definitely not when you throw in a really highly detailed environment: the headset will not be able to render it that quickly, and then it will make you nauseous.
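The frame-rate requirement can be read as a time budget: at a refresh rate of 60 frames per second, each frame has to be rendered in 1000 / 60, or about 16.7 milliseconds. A minimal Python sketch, assuming we have measured how long each frame took to render (the numbers below are made up) and simplifying by treating any frame over budget as one the headset fails to deliver on time; the function names are hypothetical.

```python
from typing import List

def frame_budget_ms(target_fps: float) -> float:
    """Time available to render one frame at the target frame rate, in milliseconds."""
    return 1000.0 / target_fps

def over_budget(render_times_ms: List[float], target_fps: float = 60.0) -> List[bool]:
    """Flag each frame whose render time exceeds the per-frame budget (simplified check)."""
    budget = frame_budget_ms(target_fps)
    return [t > budget for t in render_times_ms]

# Hypothetical measurements: two frames of a simple scene, then two of a highly detailed one
times = [11.0, 15.5, 24.0, 31.0]
print(f"budget at 60 fps: {frame_budget_ms(60):.1f} ms")
print(over_budget(times))
```

The detailed frames blow the budget, which is the situation described above: the image lags behind the head movement and the user starts to feel nauseous.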

[Federica]: So say I could try an augmented reality application on my phone and virtual reality, it's possible. What about mixed reality? Is this the least accessible?

[Ward]: So mixed reality is, I think, the most interesting one. It's also the newest type of technology, and it's the least accessible technology, because the devices you have right now in the market, they're very expensive, and they don't have a lot of content on them to really enjoy something. So it's really meant for these early developers and, you know, early technologists, startups, to try them out and to build something or to experience something with it, but it will take a couple more years before you actually walk into a store and you buy mixed reality glasses, you know.

[Federica]: What about sound? Sound has a great capability of bringing a scene to life, but sound needs to behave in a way that's believable for the environment, so it needs to match the acoustics of the virtual environment. How is that, where are we on that front? It's not such a big problem compared to the computational power required by the visuals, or, you know, where are we with sound?

[Ward]: Well, sound is very important as well. I think it's as important as visuals, because when you're building a VR, AR, or MR experience, you're trying to mimic the real world as much as possible, to make you feel like what you're looking at is real, and the better you do that, the more awesome the experience, or the more you believe that there's actually a hologram running around in your room. And sound is one of the ways to convince a person that what they're looking at is real. For example, visually you can try to create a hologram that looks like, let's say, an animal, like a cat. You can try to make the 3D model as convincing a cat as possible. You can make the shadows, the animations, and everything so that it really looks like a cat, but when it doesn't sound like a cat, you will still not be convinced that it's a cat. Right? And another interesting thing is that, especially in mixed reality, we do something called spatial audio, so we try to make the cat the source of the audio. It works like a surround sound system: when the cat runs behind you in the room, the sound comes from behind you, and you look around to see where the cat is. And once you start experiencing these things, you will really start believing that there is something in the room. Even though you know it's a hologram, because you're looking around, and you're looking under the table, and you have the feeling there's something running around here, it starts to really convince you that there's something there.
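The idea of making the cat the source of the audio can be sketched with simple geometry: work out where the cat is relative to the direction the listener is facing, then weight the left and right channels accordingly. Below is a minimal Python sketch using constant-power stereo panning, with a hypothetical floor-plane coordinate convention (x to the right, z forward). It is a deliberate simplification, not how a production engine does it: stereo panning alone cannot tell front from back, which is why real spatial audio systems use head-related transfer functions (HRTFs) to make a sound seem to come from behind you.

```python
import math
from typing import Tuple

def source_azimuth(listener_xz: Tuple[float, float], facing_deg: float,
                   source_xz: Tuple[float, float]) -> float:
    """Direction of a sound source relative to where the listener is facing.

    Coordinates are (x, z) on the floor plane, with x to the right and z forward
    (a hypothetical convention). facing_deg is measured clockwise from +z.
    Returns degrees in [-180, 180): 0 = straight ahead, +90 = right, -180 = behind.
    """
    dx = source_xz[0] - listener_xz[0]
    dz = source_xz[1] - listener_xz[1]
    bearing = math.degrees(math.atan2(dx, dz))  # clockwise angle from +z
    return (bearing - facing_deg + 180.0) % 360.0 - 180.0

def stereo_gains(azimuth_deg: float) -> Tuple[float, float]:
    """Constant-power pan: left**2 + right**2 == 1 for every direction."""
    pan = math.sin(math.radians(azimuth_deg))  # -1 = hard left, +1 = hard right
    angle = (pan + 1.0) * math.pi / 4.0        # maps pan onto 0 .. pi/2
    return math.cos(angle), math.sin(angle)

# A hypothetical holographic cat running behind the listener, slightly to the right
az = source_azimuth((0.0, 0.0), 0.0, (1.0, -2.0))
left, right = stereo_gains(az)
print(f"azimuth {az:.0f} deg, left {left:.2f}, right {right:.2f}")
```

Constant-power panning keeps the overall loudness steady as the cat moves around; an HRTF-based engine would additionally filter the sound so the listener can hear that it is behind them.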

[Federica]: Is there a plan to add other senses, or it's being done already, like the sense of smell or touch?

[Ward]: So touch is a big one. We call it haptic feedback, and today in VR, there are a lot of startups creating gloves so that when you touch something, it will actually feel like something, or if you take your hand and go through, let's say, digital cloth, digital pants or something, you would actually feel the vibrations going through your hand. I also went to a conference in San Francisco, the AWE conference, and there they showed a startup called Ultrahaptics. They built this board that you put on the table, and if you hover your hand over it, it emits ultrasound waves onto your hand and you can feel them prickling your skin a little bit. And then when you put on the headset, you're in this [labo 00:19:20] and you're creating something, and at some point, this thing spikes in your hand and you actually feel the vibration at the point where the digital object is on your hand. It gives you this extra sense, again, like, 'Whoa, this is real, I'm really touching digital things.'

[Federica]: What about research in this field? Who is leading research? Is it academia, or is it industry? Because it seems to me that we mostly observe the next step in advancement in this field with the latest commercial product. You mentioned Microsoft earlier on: nobody knew about their research in that field until they went public with a commercial product. So where is the most experimentation happening? Who leads this?

[Ward]: I think... Well, I know that there is a lot of research being done in this technology, because it is so early and there is just so much that no one has tried yet. So there is a lot of opportunity and space for research into the hardware and software, and, you know, what if we try something else? What if we try to scan a person in 3D, send it over the Wi-Fi to the other side of the world, and then view it through mixed reality again and see that person in 3D? That's telepresence, and those things, I think, are very interesting to research. On the other hand, these new devices already exist, and they've already shown that they can do a lot of really cool things: they can make work better or more time-efficient, which I think is why there's a lot of interest from the industry side as well.

[Federica]: When I tried some virtual reality experience, it really triggered my imagination. It was already incredible what I could see, what I could do in that virtual world, but my imagination could go beyond that and imagine things that may not be possible with today's technology. We'll get there, but however impressive it is, today's technology is limited, of course. Is there anything that you would like to see happen, something that you would like to do that is not currently possible?

[Ward]: I'm convinced that within 10 years, this will be the next computer. The next computing era is spatial computing. Everything you do online leaves a massive digital footprint, but we've always seen the digital world as a separate space, as something inaccessible, because you need a laptop, you need a computer, or you need a smartphone or a tablet: a device that you have to take with you and use to connect to the digital world. It's like another dimension, basically, and you use this technology to go to that place. But I believe that soon we will see those two places being connected, the physical and the digital, and this is really what spatial computing is about. That means, for example, that with smart glasses or even smart contact lenses, you would have access to a lot of data without really thinking, 'Okay, I need to take out my phone,' or, 'I need to connect to some server,' because everything will be connected to the real-world layer. Let's take a very concrete example: traffic signs. They are indicators that physically exist in the real world to show you something. For example, a road up ahead is closed and you will have to drive in a single lane. Most of the time that data already exists digitally as well, but since you're sitting in your car, you have no way of getting at the data digitally, so a real, physical traffic sign is placed. I believe that in the future you won't need to place physical traffic signs anymore, because devices will merge the real world and the digital world, so the traffic sign will just be a digital object. You'll still be driving on a real road, but digitally you will see whether there's something ahead or not.

[Federica]: Okay, so the hologram is actually going to be out there. It's not that I have a device traveling with me in my car that warns me about the hologram, or something I wear that lets me see the hologram. The hologram is...

[Ward]: Yeah.

[Federica]: ... out there.

[Ward]: It's blended, and it's very locally blended, so it's really... It's hard to explain. It's about thinking about digital content, but not in a way that it's connected to a device, not in a way that you need a computer or you need someone, something to access it, and then you grab it, and you look down and you're seeing this webpage. It's more that it's really just completely incorporated with the real world.

[Federica]: Uh-huh. How would it work from the user's perspective? How much gear do we need? Or, if it's in the environment, do we need to equip the environment, to rewire the entire world? I don't even know how a hologram works, actually. Can we have this?

[Ward]: Yeah, I think we can already do that today with a HoloLens, for example. If you had gone back just ten years, people would not have believed that ten years later you would have a HoloLens. So when we talk about ten years from now, I don't really think, 'Oh, how are we going to do it with the current technology?' because in ten years the technology will have advanced to a point where you won't see the difference between smart glasses and non-smart glasses, or between smart contact lenses and regular contact lenses. You just put on your sunglasses, and the digital and real worlds are completely blended.

[Federica]: Or you biohack your eyes.

[Ward]: Yeah, exactly.

[Federica]: So, to make this podcast episode interesting 10 years from now, let's take stock of the situation. For example, in mixed reality, what can we do today? Can you put a plant on a table?

[Ward]: Yes, you can put a plant there.

[Federica]: Okay, and can you do the cat trick?

[Ward]: Yes, I can do the cat trick.

[Federica]: All right, and can you make some object that I can interact with, like a piano?

[Ward]: You're looking for something you can't do yet, right?

[Federica]: Yeah.

[Ward]: I think that today we can visualize a lot of things. We can see the cat. We can see the piano. We can touch the piano. We can play the piano. But it's all still in its early days: okay, you can play the piano, but if you put your finger down, you might actually hit one of the other keys instead of the one you actually wanted to press. Same with the cat: the room is scanned and the cat will jump around the room, but if you move a little too much, the cat might just go through the wall, for example. So...

[Federica]: Like bugs.

[Ward]: Yeah, exactly. There are many bugs because it's such an early tech.

[Federica]: So you can do bugs.

[Ward]: Oh, yeah, we can do bugs [chuckles]. But we know where that fine line lies with today's hardware and software: 'Okay, this is possible, but if we push it too hard, it's not going to work correctly. The tracking won't be good enough, or the processor just can't render it. So let's stay just before the point where it gets so bad that people would really see that something is going wrong, like a bug, for example.'

[Federica]: Okay, so it sounds to me like the main problem is just accuracy, so there's not much you can't do. You can make a cat, but maybe sometimes it will just go through a wall.

[Ward]: Yeah. Tracking is huge. It's very important. The device tracks and scans the room, and it uses that scan of the room to place stuff around, holograms. If a surface is black, it's really hard to scan, because the light doesn't reflect off that black texture, so it might create a hole in your scan, and that's a big issue, for example. But if you can increase the speed and quality of the scanning, you can definitely do more things with it.

[Federica]: How big a deal is changing the environment? I mean, I scan a room and then I know it, but what if I move a chair? Then that's a different environment. Do I have to rescan the entire room?

[Ward]: What the HoloLens does is map the room continuously, which means that if you move the chair, it will take some time, but it will notice that the chair has moved, and it will know the new location of the chair. What you could also do, if you want a higher-quality scan of the room, is tell the user to scan the room completely: walk around it, check every corner, make sure everything is scanned nicely, and then save that scan and do something with it. But in that case, if you move the chair, the saved scan and the chair don't match anymore, so there will be bugs in the program.

[Federica]: Is this system intelligent enough to recognize certain objects or even people during the scan, or you need to vacate the room when you're scanning it?

[Ward]: Yeah, very good question. Microsoft today is really about cloud computing, everything in the cloud. So if you want a server, or an AI neural network, or you need to calculate something, you get it from the cloud with a certain license, and everything happens in the cloud. That's definitely the future of every device. The device that you or I have doesn't really have the capability to know whether an object is a television or just a computer screen, right, because they look almost the same, but the cloud has this capability, because the cloud has done that a million times. So if you buy one in a store, you're connected to the cloud, and you suddenly get all the data and all the intelligence that this cloud system has built up over years, with people improving it, improving it, improving it. And that, I think, is the most important part, definitely with mixed reality, because today when you buy a device, you don't really buy a device. You're buying the cloud systems that you get access to with it, because the device itself won't bring you much. Take a smartphone: you can't really use a smartphone out of the box. I mean, you might be able to make calls with it, okay, but today you do so many more things with smartphones. You have Facebook or email or other things, and they're all cloud systems. It's the same with mixed reality: if one HoloLens has already scanned a room perfectly, and another HoloLens that has never been in that room comes in one day, it will also just understand the room and won't have to scan it again, because they're all connected to one big AI, one big cloud, which is eventually creating a whole map of the real world, not just outside but also inside. So it will know every room, every office building it enters, and it will also have a lot of capacity to train itself to become very good at, as in your example, object recognition.

[Federica]: I've already volunteered some of my fingerprints to Apple because I need to unlock my smartphone and computer, but will the data that one day is shared about me include how my house looks from the inside, for example?

[Ward]: Yeah.

[Federica]: Aha, so, well, this raises interesting questions.

[Ward]: Questions around privacy and... Yeah. It depends how you... I know that Magic Leap, the other device, was very careful about how the scan of your room is shared, and you really have to choose whether or not to upload it to the cloud. You can really have that feature. But ultimately, I think it's more about using the data not necessarily to be able to look at a house and know the inside of the house, but more to train the model to scan houses better. It doesn't necessarily mean that you'll be able to know everyone's home, for example. But if you want, you can share it with another device, and then they can both know the person's home.

[Federica]: Well, this is probably a completely different podcast episode, but with the potential of this type of technology for the future, we also get ethical questions, indeed, about the use that we could make of it. With great power comes great responsibility, right? So, speaking of getting to know and learn this technology, you said that you were a gamer, that you got interested at a very young age and just started doing it. But how difficult is it if a kid at home right now, like you a couple of years ago, is interested in this and wants to try playing with virtual reality or mixed reality? I mean, do you need a degree in engineering just to start doing this, or are there tools and ways that make the learning curve not so daunting?

[Ward]: I learned to program for mixed reality and make these experiences by making my own little 3D games. From the moment that you are able to make a simple game in 3D, and you have some skills in how to make something move in the game, and how to make something happen when you touch it, or when you click on it... I mean, in my head I'm already touching things. I'm not thinking about clicking on things anymore. But once you have the skills to make a simple game with interaction, you are ready to make a mixed reality game where you enter the room and use the environment to make your game. The Unity game engine is free to get started with, it's definitely something you can learn pretty easily out of the box, and it's the recommended program to work with for these types of devices. So it's not rocket science. We sell it like rocket science, maybe. [chuckles] It's not rocket science to develop with it, but obviously there's a skill gap, where at a certain point how good you are shows in the quality of what you're making. Right?

[Federica]: So this will not be the reason that finally gets young kids interested in the math class in school. You can actually start playing with mixed reality without being an engineer, so you don't need to know the math behind these things?

[Ward]: That's true. I think it depends on what you want to achieve. If you want to make a better device than the HoloLens, and you want to improve the tracking of the sensors, or you want to make the hand tracking more accurate, you will really go into these hand-tracking models, and then you would need advanced knowledge of them, and of coding, math, and physics. But if you're there to create a very simple experience, like bouncing a ball around the room, then to be honest, that's quite easy to do.

[Federica]: I could make my own pretty cat that walks right through a wall.

[Ward]: Yes. You can also make one that doesn't walk through the wall.

[Federica]: Maybe. Okay, so we said that you can make a plant, we can have a ball that bounces on the floor, and we can have a cat. Now, a cat even in the real world is something different than a plant or a ball because it has agency, it's a living thing, so it decides to move around, and as long as it interacts with me, that's fine, but what if it interacted with other virtual cats, say, social interaction among synthetic entities? Is that possible?

[Ward]: Yes.

[Federica]: I mean, is there enough intelligence built into these cats for them to interact among themselves?

[Ward]: You can look at it this way. So you know Rollercoaster Tycoon?

[Federica]: No.

[Ward]: It's a game that I think came out on Windows maybe 20 years ago. It's quite an old game, and it's very advanced. You can make a whole theme park, with people coming to your park; you have to build attractions and try to make a lot of money from the people visiting. These people are really interactive: they interact with the shops and with other people and so on, and this game was made a long time ago. The way you have to see it is that the ground these characters walk on is a predefined 3D model of a flat floor, but today with mixed reality, that ground becomes the device's scan of your real room. Everything on top of that, the theme-park buildings and the characters interacting with each other, is perfectly possible in the game engine, because you basically replace the environment with the real world, and all the interactions that games have (and modern games have a lot of interactions) are possible in mixed reality. The main issue there, I think, is the processor of the device, which doesn't let you display very high-quality 3D models. There's this other game, Zoo Tycoon, in the same vein as Rollercoaster Tycoon, where you have to build a zoo and you have animals that interact with each other. You could perfectly build a Zoo Tycoon game on a HoloLens, where you use your room, place real-size animals in it, give them food, and everything. It's perfectly possible today because it uses the same ideas, code, and mechanics as a modern game, but the environment is replaced by the real world.

[Federica]: Since you've been involved with these things, has the scene already changed significantly? I mean, you started as a young kid, but you're still young, so I guess this has not been more than 10 years. During these years, has the scene already changed a lot?

[Ward]: Yes. I think I started coding when I was probably 14 or 15. We had this game called Minecraft, and two friends and I decided to build a server where other people could join your world and create stuff together, and then we started to change the game. It was Java-based and easy to modify, so we started to change the game, make new rules in the game, and apply them to the people playing online, and we kind of created this massive community and a YouTube channel, everything around it. I think that's where it really started for me. When we went to college, we didn't have enough time to keep doing it, but I still enjoyed the mechanics of making a game, so I just started to make a few of my own games, and then I made some VR games, because VR was already quite old, so to speak, right? I think I made my first VR game when I was on Erasmus in Copenhagen, or I made one before that... I'm sorry. I think it was about four or five years ago, but there was no Oculus or HTC Vive yet; there was just Cardboard, so you slide your phone into the Cardboard, and then you have this idea of a VR game. I was really excited about that, so I made this whole shooter in there. It was really fast, actually, and then when mixed reality and the HoloLens came out, I just focused on that. So I don't know where it really started. I guess my Unity experience is about five years old, I think, which is the program that I use to build these experiences. But I don't really believe in time as the measure of how long you've been doing something, because how many years you've spent on something doesn't say how deeply you've gone into it. For me, when I saw the HoloLens 3 years ago, I didn't do anything else. My everyday life was just HoloLens and concepts and how to build things for it, so I really specialized in it, and it was really just what I wanted to do.

[Federica]: You're the co-founder of the competence center Roger Roll. How did this come to be? What led to this company?

[Ward]: With the HoloLens, with mixed reality, so not with VR, but mixed reality. It really started in the second year of my bachelor's, in 2016. That's when they showed the device for the first time, and I thought, 'I need to have it,' and they said it was only for North America. And I thought, 'Well, I need to find a way to get it, because it looks amazing.' So the first thing I did was go to The Cronos Group, just because I knew it was a really large group with a lot of companies inside it, and I thought, 'They must have the network to get this device,' and I was right. So I got an internship at The Cronos Group, at a company called Continuous, and they got the first HoloLens in Belgium. Then I built some apps for them on the HoloLens, and at the same time I got contacted by another company called Collibra (they're a data science company). I did my final college thesis with them, and I was allowed to present it in New York at their conference. I was really happy about that. When I graduated, I decided to start an eenmanszaak, which is a sole proprietorship, a one-person company in Belgium, to keep on developing for the HoloLens. I had already gotten some job offers at that time from companies I had built HoloLens apps for before, but I thought, 'I really want to do this on my own.' So I did a few projects, and then I decided to also do research, and I worked 50% for the college in Brussels doing research on AR, VR, and MR, and I did that for a year. Then at some point I got contacted again by The Cronos Group, by [unclear], who's sitting behind us right now, and he told me, 'Look, Ward, I've been following you for the last two years, since the HoloLens came out. Do you want to start a company inside The Cronos Group just to do this type of development?' And I was like, 'Yeah, sure, let's do it,' and then, yeah, we're in the process.

[Federica]: From what you've been saying, I gather that if you're good, there's plenty of opportunities in this field right now.

[Ward]: Yeah, for sure.

[Federica]: And speaking of training and getting good, you have spent a summer or semester at MIT in a training program. What did you learn there?

[Ward]: So the course, it was imaging. It was all about AI and computer vision, and what we learned was a summary of all the things that were going on at MIT at that time around cameras and computer vision, very interesting things. Yeah, I think a lot of projects were about satellite images and trying to get data out of a satellite image.

[Federica]: Satellites like large regions or the swimming pool in the backyard?

[Ward]: The [unclear] itself or...

[Federica]: Yeah, what kind of data were you extracting from satellite images?

[Ward]: So one project they were working on, which is really cool, was using satellite images to determine population. You could take a satellite image of Antwerp, and it could tell you how many people live in Antwerp. They were doing that project because in some remote regions in Africa there are no exact numbers for how many people live there, and when disaster strikes, that's very useful information, so they could get that information out of satellite pictures. Another one was using Google Street View images of streets and giving them a score for how the neighborhood has developed. You could take a neighborhood in Antwerp, and the AI system would run on 10 years of pictures (because Google keeps all the pictures it has ever taken) and then give you a score for whether the neighborhood has developed well over those years or whether it has deteriorated. But yeah, a lot about imaging, using AI, facial recognition, getting data out of just a plain image, going from knowing whether an object is close or far away to, you know, knowing how many people are in an image, who is in the image, things like that.

[Federica]: Is there something that you think we will see in our lifetime (you're a bit younger than I am, so something you will surely see in your lifetime) that we don't have now? Not just the next step, something we can't do with mixed reality today and will be able to do in 10 years, but really a vision for the future. In 50 years from now, if the world looked like a different place, why would that be? What technology would we have? I know it's a huge question, but do you have a vision about that?

[Ward]: Apart from mixed reality, I'm very interested in self-driving cars. Peter Hinssen made a statement, 'In 2030, you will not be allowed to drive a car anymore,' and I love that statement, and I hope it's true. But apart from self-driving cars, one thing that I find very interesting is being able to really blend the digital and physical worlds together. It's something I almost fantasize about. It's the idea of, okay, you have to get some milk or you have to go to the bakery, and you do that every morning, and it's boring. But what if you go into that bakery with your glasses on and you're now, you know, Indiana Jones in the jungle, and your whole experience is completely blended, physically and digitally? I think that would be awesome.

[Federica]: Spice up your life a little bit.

[Ward]: [chuckles] Yeah.

[Federica]: Okay. As a developer, as someone who is heavily, professionally, and by passion involved with this, do you already spend a lot of time in these other worlds? So you spend your time coding, you spend your time testing, so at the end of the day, your everyday life is already futuristic compared to that of any of us, would you say?

[Ward]: I live very much in the digital world, that's true. When we're working on a project for the HoloLens, we spend quite a lot of time with the HoloLens on, because, yeah, you have to test things. I remember this one time when I was still a student, working on an application, and that day I really wanted to make it look very good visually, so I had to test it a lot. At the end of the day, I took the device off and realized I was in this really bad-looking, empty office. It was a temporary office, in [unclear]. I'm not going to say which one it was, but we were there, and it had these grey walls, and there was just nothing there. There was no color. It was just so boring. I went in there in the morning, a bit tired, put on the device, and had a good time the whole day seeing the 3D objects and making them look exactly how I wanted them to look. And at the end of the day I took the device off and I was like, 'Man, this room really looks bad. I should probably open the blinds.' You know?

[Federica]: 'Bummer. This is the real world.'

[Ward]: Boring. It's just boring.

[Federica]: 'It's boring.' Well, now it's time for the big question. Can I try this? Can you let me try this Hololens right here?

[Ward]: Sure, I'll show you. I have to power it on, and hopefully somebody charged it.

[Federica]: Okay. First, let me thank you for being on Technoculture.

[Ward]: Yeah, no problem. Thank you for having me.

[Federica]: And now let's have some fun. Thank you for listening to Technoculture. Check out more episodes at technoculture-podcast.com, or visit our Facebook page @technoculturepodcast and our Twitter account, hashtag Technoculturepodcast.

Page created: August 2019

Last updated: July 2021